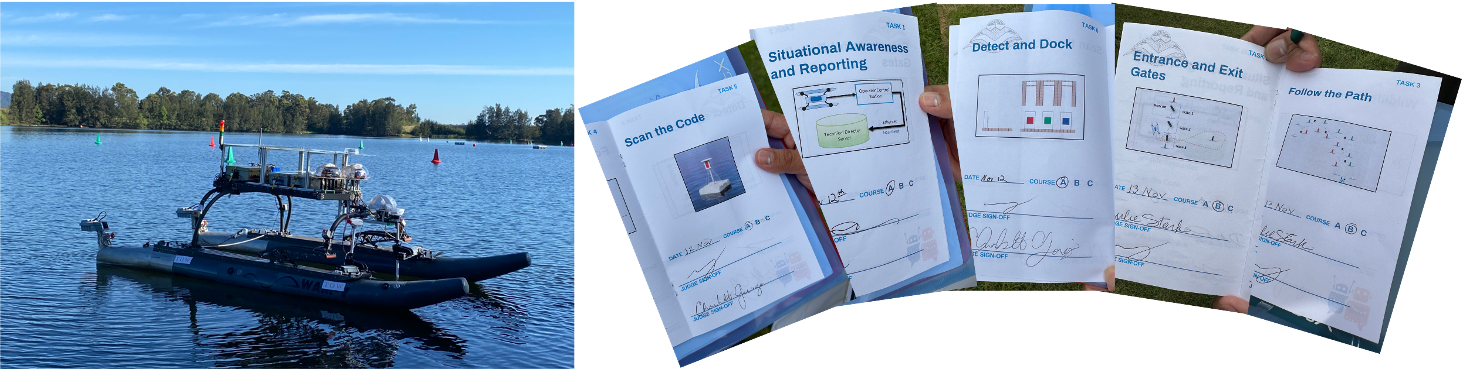

We participated in the 2022 Maritime RobotX Challenge and were awarded 3rd place in the final stage. We built a heterogeneous USV and UAV team to solve multiple tasks, including autonomous docking, navigation, obstacle avoidance, UAV launch and recovery, Scan the Code, racquetball flinging, and acoustic pinging.

simulator; achieved sim-to-real results for goal navigation and collision avoidance

the state of WAM-V with Python, C++ and ROS

as well as cooperating with UAV

for communicating with competition organizer